Lava-DL Bootstrap

Rate-based SNNs generated by ANN-SNN conversion methods require long runtimes to achieve high accuracy, because the rate-based approximation of the ReLU activation unit breaks down for short runtimes.

lava.lib.dl.bootstrap accelerates rate-coded Spiking Neural Network (SNN) training by dynamically estimating the equivalent ANN transfer function of a spiking layer with a piecewise linear model at regular epoch intervals, and using the equivalent ANN network to train the original SNN.

Highlight features

Accelerated rate coded SNN training.

Low latency inference of trained SNN made possible by close modeling of equivalent ANN dynamics.

Hybrid training with a mix of SNN layers and ANN layers for minimal drop in SNN accuracy.

Scheduler for seamless switching between different bootstrap modes.

Bootstrap Training

The underlying principle of ANN-SNN conversion is that the ReLU activation function (or a similar form) approximates the firing rate of an LIF spiking neuron. Consequently, an ANN trained with ReLU activation can be mapped to an equivalent SNN with proper scaling of weights and thresholds. However, as the number of time steps is reduced, the alignment between the ReLU activation and the LIF spiking rate falls apart, mainly for the following two reasons (especially for discrete-in-time models like Loihi’s CUBA LIF):

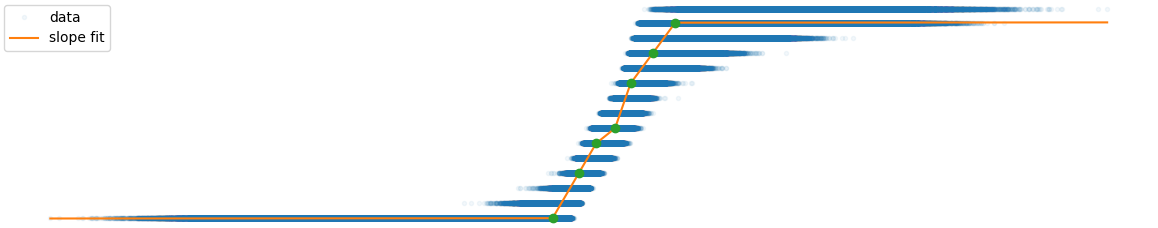

With fewer time steps, the SNN can assume only a few discrete firing rates.

With limited time steps, the spiking neuron's activity rate often saturates at the maximum allowable firing rate.
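Both effects can be seen in a small simulation. The following is a minimal sketch, assuming a non-leaky integrate-and-fire neuron with subtractive reset driven by a constant input current; it is a deliberate simplification and not Loihi's CUBA LIF model. The names `firing_rate` and `relu` are hypothetical helpers introduced only for this illustration.

```python
def firing_rate(current, steps, threshold=1.0):
    """Spike rate of a simplified (non-leaky) integrate-and-fire neuron.

    Assumption: constant input current and subtractive reset. This is an
    illustrative model only, not the neuron used by lava.lib.dl.
    """
    voltage = 0.0
    spikes = 0
    for _ in range(steps):
        voltage += current
        if voltage >= threshold:
            spikes += 1
            voltage -= threshold  # subtractive reset
    return spikes / steps


def relu(x):
    """Reference ANN activation."""
    return max(0.0, x)


steps = 4
currents = [i / 8 for i in range(-2, 17)]  # -0.25, -0.125, ..., 2.0
rates = [firing_rate(c, steps) for c in currents]

# With only 4 time steps the rate is restricted to multiples of 1/4.
distinct_rates = sorted(set(rates))

for c, r in zip(currents, rates):
    print(f"current={c:+.3f}  relu={relu(c):.3f}  rate={r:.2f}")
```

With `steps = 4`, the rate can only take values that are multiples of 1/4, and any current at or above the threshold yields the same saturated rate of 1 spike per step, while ReLU keeps growing.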

In bootstrap training, an SNN is used to jumpstart an equivalent ANN model, which is then used to accelerate SNN training. There is no restriction on the type of spiking neuron or its reset behavior. It consists of the following steps:

Input-output data points are first collected from the network running as an SNN: ``bootstrap.mode.SAMPLE`` mode.

The data is used to estimate the corresponding ANN activation as a piecewise linear layer, unique to each layer: ``bootstrap.mode.FIT`` mode.

The training is accelerated using the piecewise linear ANN activation: ``bootstrap.mode.ANN`` mode.

The network is seamlessly translated to an SNN: ``bootstrap.mode.SNN`` mode.

SAMPLE mode and FIT mode are repeated for a few iterations every couple of epochs, thus maintaining an accurate ANN estimate.
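The steps above can be sketched as a simple per-iteration mode selection. This is only an illustration of the idea under stated assumptions: `Mode`, `training_mode`, `refit_interval`, and `sample_length` are hypothetical names invented for this sketch, and it does not reproduce the behavior or signature of the library's scheduler.

```python
from enum import Enum


class Mode(Enum):
    """Hypothetical stand-in for the four bootstrap modes named above."""
    SAMPLE = "sample"
    FIT = "fit"
    ANN = "ann"
    SNN = "snn"


def training_mode(epoch, iteration, refit_interval=2, sample_length=3):
    """Pick the mode for one training iteration.

    Assumption: every `refit_interval` epochs, the first `sample_length`
    iterations collect SNN data (SAMPLE), the next iteration refits the
    piecewise linear activation (FIT), and all remaining iterations train
    with the fitted ANN activation (ANN).
    """
    if epoch % refit_interval == 0:
        if iteration < sample_length:
            return Mode.SAMPLE
        if iteration == sample_length:
            return Mode.FIT
    return Mode.ANN


# Mode used for each of 6 iterations over 3 epochs.
schedule = [[training_mode(e, i).name for i in range(6)] for e in range(3)]
for epoch, modes in enumerate(schedule):
    print(epoch, modes)

# Inference and validation would run the network as an SNN.
inference_mode = Mode.SNN
```

In this sketch, epochs 0 and 2 start with a sampling and refit phase, while epoch 1 trains entirely with the previously fitted ANN activation.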

Hybridization

The dynamic estimation of the ANN activation function may still not be enough to reduce the gap between an SNN and its equivalent ANN, especially when the number of inference time steps is low and the networks grow deep. In such a scenario, one can take a hybrid approach: directly train a part of the network as SNN layers/blocks while accelerating the rest of the layers/blocks with bootstrap training.

With the bootstrap.block interface, some of the layers in the network can be run as SNN and the rest as ANN. We define a crossover layer: the layers earlier than it always run as SNN, and the remaining layers run in ANN-SNN bootstrap mode.
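The crossover split can be pictured with a short sketch. The function `layer_modes` and its parameters are hypothetical and exist only for this illustration; in particular, treating the crossover layer itself as the first bootstrap layer is an assumption, since the text above does not state which side it falls on.

```python
def layer_modes(num_layers, crossover, bootstrap_mode):
    """Return the mode each layer runs in for a hybrid network.

    Assumption: layers with index below `crossover` always run as SNN;
    layers from `crossover` onward follow the current bootstrap mode
    (for example "SAMPLE", "FIT", "ANN", or "SNN").
    """
    return [
        "SNN" if index < crossover else bootstrap_mode
        for index in range(num_layers)
    ]


# During accelerated training, only the later layers use the ANN estimate.
print(layer_modes(num_layers=5, crossover=2, bootstrap_mode="ANN"))

# At inference time, the whole network runs as an SNN.
print(layer_modes(num_layers=5, crossover=2, bootstrap_mode="SNN"))
```

A crossover of 0 in this sketch corresponds to plain bootstrap training of every layer, while a crossover equal to the number of layers corresponds to training the whole network directly as an SNN.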

Tutorials

Modules

The main modules are

bootstrap.block

It provides a lava.lib.dl.slayer.block based network definition interface.

bootstrap.ann_sampler

It provides utilities for sampling SNN data points and fitting a piecewise linear ANN activation.
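To make the idea of a piecewise linear fit concrete, here is a minimal sketch in plain Python. It is not the fitting procedure used by bootstrap.ann_sampler, which is not described here; it simply averages samples in equal-width input bins and interpolates linearly between the bin averages. The names `fit_piecewise_linear` and `evaluate` are hypothetical, and the sample data is a synthetic stand-in for rates collected from a spiking layer.

```python
from bisect import bisect_right


def fit_piecewise_linear(inputs, outputs, num_segments=4):
    """Fit knots (x, y) as the mean input and output in equal-width bins.

    Assumption: the inputs are not all identical (the bin width must be
    nonzero). Empty bins are skipped.
    """
    low, high = min(inputs), max(inputs)
    width = (high - low) / num_segments
    x_sums = [0.0] * num_segments
    y_sums = [0.0] * num_segments
    counts = [0] * num_segments
    for x, y in zip(inputs, outputs):
        index = min(int((x - low) / width), num_segments - 1)
        x_sums[index] += x
        y_sums[index] += y
        counts[index] += 1
    return [
        (x_sums[i] / counts[i], y_sums[i] / counts[i])
        for i in range(num_segments)
        if counts[i] > 0
    ]


def evaluate(knots, x):
    """Interpolate linearly between knots; clamp outside the knot range."""
    xs = [knot[0] for knot in knots]
    if x <= xs[0]:
        return knots[0][1]
    if x >= xs[-1]:
        return knots[-1][1]
    i = bisect_right(xs, x) - 1
    (x0, y0), (x1, y1) = knots[i], knots[i + 1]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


# Synthetic stand-in for sampled (input, firing rate) pairs: a rate that is
# zero for negative input and saturates at 1.
inputs = [i / 8 for i in range(-8, 17)]  # -1.0 ... 2.0
outputs = [min(max(x, 0.0), 1.0) for x in inputs]

knots = fit_piecewise_linear(inputs, outputs, num_segments=4)
values = [evaluate(knots, x) for x in inputs]
print(knots)
```

Unlike ReLU, the fitted curve flattens out at the saturated rate, which is the property that lets an ANN with this activation track the SNN more closely at short runtimes.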

bootstrap.routine

bootstrap.routine.Scheduler provides an easy scheduling utility to seamlessly switch between SAMPLING | FIT | ANN | SNN modes. It also provides an ANN-SNN bootstrap hybrid training utility, determined by the crossover point.