Copyright (C) 2021 Intel Corporation SPDX-License-Identifier: BSD-3-Clause See: https://spdx.org/licenses/

Hierarchical Processes and SubProcessModels

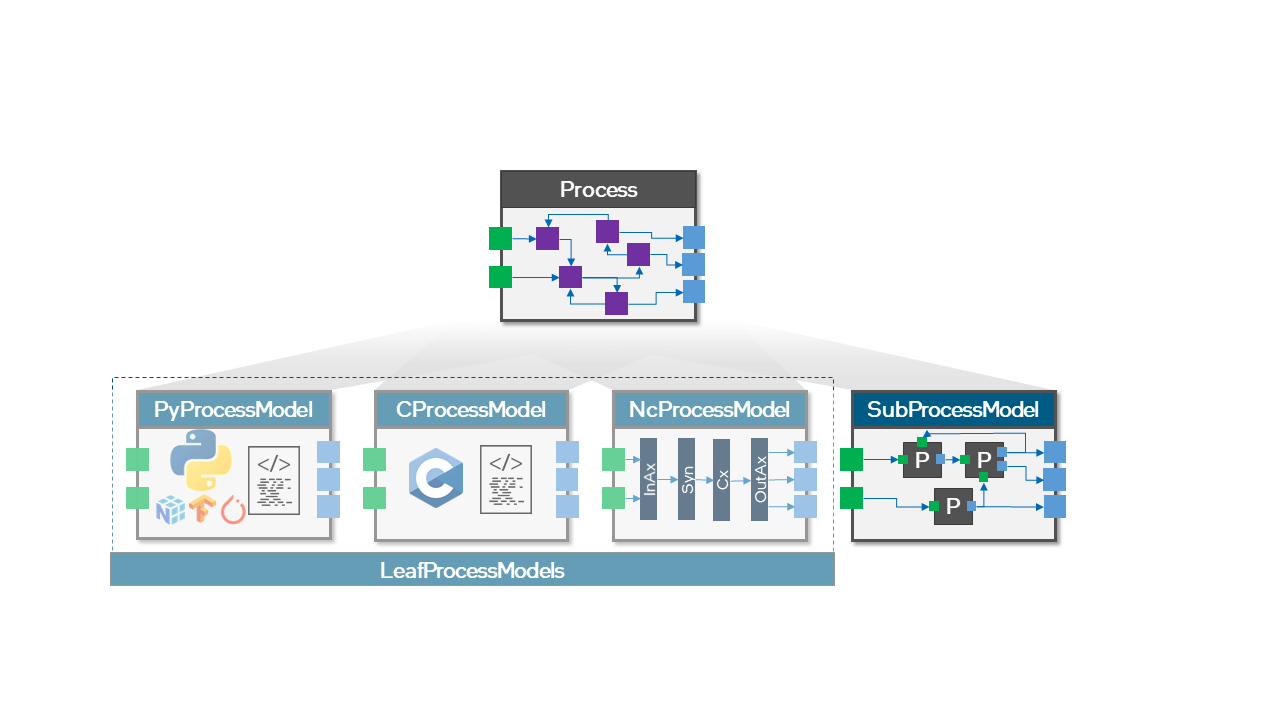

Previous tutorials briefly covered the two categories of ProcessModels: LeafProcessModels and SubProcessModels. The ProcessModel Tutorial explained LeafProcessModels in detail. These implement the behavior of a Process directly, in the language (for example, Python or the Loihi Neurocore API) required for a particular compute resource (for example, a CPU or Loihi Neurocores). SubProcessModels, by contrast, allow users to implement and compose the behavior of a Process using other Processes. This enables the creation of Hierarchical Processes and the reuse of primitive ProcessModels to realize more complex ProcessModels. SubProcessModels inherit all compute resource requirements from the ProcessModels of the sub-Processes they instantiate.

In this tutorial, we will create a DenseLayer Hierarchical Process that has the behavior of a population of Leaky-Integrate-and-Fire (LIF) neurons driven through dense synaptic connections. The DenseLayer SubProcessModel implements this behavior via the primitive LIF and Dense connection Processes and their respective PyLoihiProcessModels.

Recommended tutorials before starting:

Create LIF and Dense Processes and ProcessModels

The ProcessModel Tutorial walks through the creation of a LIF Process and an implementing PyLoihiProcessModel. Our DenseLayer Process additionally requires a Dense Lava Process and ProcessModel that have the behavior of a dense set of synaptic connections and weights. The Dense connection Process can be used to connect neural Processes. For completeness, we'll first briefly show example LIF and Dense Processes and their PyLoihiProcessModels.

Create a Dense connection Process


from lava.magma.core.process.process import AbstractProcess
from lava.magma.core.process.variable import Var
from lava.magma.core.process.ports.ports import InPort, OutPort


class Dense(AbstractProcess):
    """Dense connections between neurons.

    Realizes the following abstract behavior:
    a_out = W * s_in
    """

    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        # shape = (num_out, num_in): rows index post-synaptic neurons,
        # columns index pre-synaptic neurons.
        shape = kwargs.get("shape", (1, 1))
        self.s_in = InPort(shape=(shape[1],))
        self.a_out = OutPort(shape=(shape[0],))
        self.weights = Var(shape=shape, init=kwargs.pop("weights", 0))

Create a Python Dense connection ProcessModel implementing the Loihi Sync Protocol and requiring a CPU compute resource


import numpy as np

from lava.magma.core.sync.protocols.loihi_protocol import LoihiProtocol
from lava.magma.core.model.py.ports import PyInPort, PyOutPort
from lava.magma.core.model.py.type import LavaPyType
from lava.magma.core.resources import CPU
from lava.magma.core.decorator import implements, requires
from lava.magma.core.model.py.model import PyLoihiProcessModel


@implements(proc=Dense, protocol=LoihiProtocol)
@requires(CPU)
class PyDenseModel(PyLoihiProcessModel):
    s_in: PyInPort = LavaPyType(PyInPort.VEC_DENSE, bool)
    a_out: PyOutPort = LavaPyType(PyOutPort.VEC_DENSE, float)
    weights: np.ndarray = LavaPyType(np.ndarray, float)

    def run_spk(self):
        # Binary spike vector; used as a boolean mask over the weight columns.
        s_in = self.s_in.recv().astype(bool)
        # Sum the weight columns of all pre-synaptic neurons that spiked.
        a_out = self.weights[:, s_in].sum(axis=1)
        self.a_out.send(a_out)
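The column-masking expression in run_spk is the binary-spike special case of the matrix-vector product a_out = W * s_in from the Dense docstring. The following plain-NumPy check, independent of Lava and using made-up weights, sketches that equivalence:

```python
import numpy as np

# Made-up example: 3 post-synaptic (rows) x 4 pre-synaptic (columns) neurons.
weights = np.array([[1.0, 0.0, 2.0, 0.0],
                    [0.0, 3.0, 0.0, 1.0],
                    [0.5, 0.5, 0.5, 0.5]])
# Binary spike vector: pre-synaptic neurons 0 and 2 spiked.
s_in = np.array([True, False, True, False])

# As in PyDenseModel.run_spk: add up the weight columns of the spiking neurons.
a_out_masked = weights[:, s_in].sum(axis=1)

# The abstract behavior a_out = W * s_in, written as a matrix-vector product.
a_out_matvec = weights @ s_in.astype(float)

print(a_out_masked)  # [3. 0. 1.]
assert np.allclose(a_out_masked, a_out_matvec)
```

Masking skips the columns of neurons that did not spike; for graded (non-binary) inputs, the matrix-vector form would be needed instead.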

Create a LIF neuron Process


from lava.magma.core.process.process import AbstractProcess
from lava.magma.core.process.variable import Var
from lava.magma.core.process.ports.ports import InPort, OutPort


class LIF(AbstractProcess):
    """Leaky-Integrate-and-Fire (LIF) neural Process.

    LIF dynamics abstracts to:
    u[t] = u[t-1] * (1-du) + a_in              # neuron current
    v[t] = v[t-1] * (1-dv) + u[t] + bias_mant  # neuron voltage
    s_out = v[t] >= vth                        # spike if threshold is reached
    v[t] = 0                                   # reset at spike

    Parameters
    ----------
    shape: Shape of the neuron population.
    du: Inverse of decay time-constant for current decay.
    dv: Inverse of decay time-constant for voltage decay.
    bias_mant: Neuron bias.
    vth: Neuron threshold voltage; the neuron spikes when its voltage reaches vth.
    """

    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        shape = kwargs.get("shape", (1,))
        du = kwargs.pop("du", 0)
        dv = kwargs.pop("dv", 0)
        bias_mant = kwargs.pop("bias_mant", 0)
        vth = kwargs.pop("vth", 10)

        self.shape = shape
        self.a_in = InPort(shape=shape)
        self.s_out = OutPort(shape=shape)
        self.u = Var(shape=shape, init=0)
        self.v = Var(shape=shape, init=0)
        self.du = Var(shape=(1,), init=du)
        self.dv = Var(shape=(1,), init=dv)
        self.bias_mant = Var(shape=shape, init=bias_mant)
        self.vth = Var(shape=(1,), init=vth)

Create a Python LIF neuron ProcessModel implementing the Loihi Sync Protocol and requiring a CPU compute resource


import numpy as np

from lava.magma.core.sync.protocols.loihi_protocol import LoihiProtocol
from lava.magma.core.model.py.ports import PyInPort, PyOutPort
from lava.magma.core.model.py.type import LavaPyType
from lava.magma.core.resources import CPU
from lava.magma.core.decorator import implements, requires
from lava.magma.core.model.py.model import PyLoihiProcessModel


@implements(proc=LIF, protocol=LoihiProtocol)
@requires(CPU)
class PyLifModel(PyLoihiProcessModel):
    a_in: PyInPort = LavaPyType(PyInPort.VEC_DENSE, float)
    s_out: PyOutPort = LavaPyType(PyOutPort.VEC_DENSE, bool, precision=1)
    u: np.ndarray = LavaPyType(np.ndarray, float)
    v: np.ndarray = LavaPyType(np.ndarray, float)
    bias_mant: np.ndarray = LavaPyType(np.ndarray, float)
    du: float = LavaPyType(float, float)
    dv: float = LavaPyType(float, float)
    vth: float = LavaPyType(float, float)

    def run_spk(self):
        a_in_data = self.a_in.recv()
        self.u[:] = self.u * (1 - self.du)
        self.u[:] += a_in_data
        self.v[:] = self.v * (1 - self.dv) + self.u + self.bias_mant
        s_out = self.v >= self.vth
        self.v[s_out] = 0  # Reset voltage to 0
        self.s_out.send(s_out)
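To see what these update equations do over time, here is a plain-NumPy mirror of PyLifModel.run_spk that runs outside Lava. The function name lif_run is hypothetical; the values bias_mant=4 and vth=10 match the ones used later in this tutorial.

```python
import numpy as np


def lif_run(num_steps, a_in, du=0.0, dv=0.0, bias_mant=4.0, vth=10.0):
    """Plain-NumPy mirror of PyLifModel.run_spk for a population of LIF neurons.

    a_in has shape (num_steps, num_neurons): the input current at each step.
    """
    n = a_in.shape[1]
    u = np.zeros(n)
    v = np.zeros(n)
    v_trace, spikes = [], []
    for t in range(num_steps):
        u[:] = u * (1 - du)          # current decay
        u[:] += a_in[t]              # integrate synaptic input
        v[:] = v * (1 - dv) + u + bias_mant
        s_out = v >= vth             # spike when the threshold is reached
        v[s_out] = 0                 # reset neurons that spiked
        v_trace.append(v.copy())
        spikes.append(s_out.copy())
    return np.array(v_trace), np.array(spikes)


# One neuron, no synaptic input: the bias alone drives v to 4, 8, 12 -> spike and reset.
v_trace, spikes = lif_run(6, a_in=np.zeros((6, 1)))
print(v_trace[:, 0])                   # [4. 8. 0. 4. 8. 0.]
print(np.flatnonzero(spikes[:, 0]))    # [2 5]
```

With du = dv = 0 there is no leak, so the bias charges the neuron by 4 per step and it reaches vth = 10 every third step; a nonzero dv would slow this charging.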

Create a DenseLayer Hierarchical Process that encompasses Dense and LIF Process behavior

Now, we create a DenseLayer Hierarchical Process combining LIF neural Processes and Dense connection Processes. Our Hierarchical Process contains all of the variables (u, v, bias_mant, du, dv and vth) native to the LIF Process plus the weights variable native to the Dense Process. The InPort of our Hierarchical Process is s_in, which represents the spike inputs to our Dense synaptic connections. These Dense connections synapse onto a population of LIF neurons. The OutPort of our Hierarchical Process is s_out, which represents the spikes output by the layer of LIF neurons. We do not have to define the OutPort of the Dense Process (a_out) nor the InPort of the LIF Process (a_in) in the DenseLayer Process, as they are only used internally and are not exposed.
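The port shapes follow from the weight-matrix convention of the Dense Process, where rows index the post-synaptic LIF neurons and columns index the pre-synaptic inputs. A hypothetical helper, not part of Lava, that spells this out for a non-square layer:

```python
def dense_layer_shapes(shape):
    """Hypothetical helper: DenseLayer port/variable shapes for a (num_out, num_in) weight shape."""
    num_out, num_in = shape
    return {
        "s_in": (num_in,),              # exposed InPort: one entry per input neuron
        "dense.a_out": (num_out,),      # internal Dense output, not exposed
        "lif.a_in": (num_out,),         # internal LIF input, must match dense.a_out
        "s_out": (num_out,),            # exposed OutPort: one entry per LIF neuron
        "u, v, bias_mant": (num_out,),  # per-neuron LIF variables
        "weights": (num_out, num_in),
    }


shapes = dense_layer_shapes((2, 5))     # 5 inputs feeding 2 LIF neurons
print(shapes["s_in"], shapes["s_out"])  # (5,) (2,)
```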


class DenseLayer(AbstractProcess):
    """Combines Dense and LIF Processes."""

    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        shape = kwargs.get("shape", (1, 1))
        du = kwargs.pop("du", 0)
        dv = kwargs.pop("dv", 0)
        bias_mant = kwargs.pop("bias_mant", 0)
        bias_exp = kwargs.pop("bias_exp", 0)
        vth = kwargs.pop("vth", 10)
        weights = kwargs.pop("weights", 0)

        self.s_in = InPort(shape=(shape[1],))
        self.s_out = OutPort(shape=(shape[0],))

        self.weights = Var(shape=shape, init=weights)
        self.u = Var(shape=(shape[0],), init=0)
        self.v = Var(shape=(shape[0],), init=0)
        self.bias_mant = Var(shape=(shape[0],), init=bias_mant)
        self.du = Var(shape=(1,), init=du)
        self.dv = Var(shape=(1,), init=dv)
        self.vth = Var(shape=(1,), init=vth)

Create a SubProcessModel that implements the DenseLayer Process using Dense and LIF child Processes

Now, we will create the SubProcessModel that implements our DenseLayer Process. It inherits from the AbstractSubProcessModel class. Recall that SubProcessModels inherit the compute resource requirements of the ProcessModels of their child Processes. In this example, the LIF child Process is implemented by the CPU-based PyLifModel defined earlier in the tutorial. Note that the code below imports Dense from lava.proc.dense.process, so the Dense child Process is the Dense Process from Lava's process library (whose Python ProcessModels also require a CPU) rather than the Dense Process defined earlier. SubDenseLayerModel will therefore implicitly require the CPU compute resource.

The __init__() constructor of SubDenseLayerModel builds the sub-Process structure of the DenseLayer Process. The DenseLayer Process is passed to __init__() via the proc argument. The constructor first instantiates the child LIF and Dense Processes. The initial conditions of the DenseLayer Process, which are required to instantiate the child LIF and Dense Processes, are accessed through proc.proc_params.
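DenseLayer.__init__() pops its parameters from kwargs only after calling super().__init__(**kwargs), yet SubDenseLayerModel can still read them from proc.proc_params. Assuming, as this tutorial's code implies, that the base Process records the keyword arguments it receives, this works because `**kwargs` unpacking builds a fresh dict in every call. The toy classes below are hypothetical stand-ins, not Lava classes:

```python
class ToyProcess:
    """Hypothetical stand-in for a base Process that records its keyword arguments."""

    def __init__(self, **kwargs):
        # This kwargs dict is a fresh copy created by the ** unpacking at the call site.
        self.proc_params = kwargs


class ToyDenseLayer(ToyProcess):
    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        # Popping from this method's own dict does not touch self.proc_params.
        self.vth = kwargs.pop("vth", 10)


layer = ToyDenseLayer(shape=(3, 3), vth=12)
print(layer.proc_params)  # {'shape': (3, 3), 'vth': 12}
```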

We then connect() the InPort of the DenseLayer parent Process to the InPort of the Dense child Process, and the OutPort of the LIF child Process to the OutPort of the DenseLayer parent Process. Note that ports of the DenseLayer parent Process are accessed using proc.in_ports or proc.out_ports, while ports of a child Process like LIF are accessed using self.lif.in_ports and self.lif.out_ports. Our SubProcessModel also internally connect()s the OutPort of the Dense connection child Process to the InPort of the LIF neural child Process.
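Conceptually, the parent-level ports act as pass-throughs: in the two-layer example later in this tutorial, a spike leaving layer0's LIF child ultimately reaches layer1's Dense child. The following toy sketch, with hypothetical string port names and a hypothetical resolve() helper rather than anything from Lava's compiler, follows parent-level ports until it reaches ports of the leaf Dense and LIF children:

```python
# Hypothetical port names: "<layer>.<port>" for DenseLayer ports,
# "<layer>.<child>.<port>" for ports of the leaf Dense and LIF children.
connections = {
    "layer0.s_in": ["layer0.dense.s_in"],       # parent InPort -> child InPort
    "layer0.dense.a_out": ["layer0.lif.a_in"],  # internal child -> child
    "layer0.lif.s_out": ["layer0.s_out"],       # child OutPort -> parent OutPort
    "layer0.s_out": ["layer1.s_in"],            # layer0 -> layer1
    "layer1.s_in": ["layer1.dense.s_in"],
    "layer1.dense.a_out": ["layer1.lif.a_in"],
    "layer1.lif.s_out": ["layer1.s_out"],
}


def is_leaf_port(port):
    """Ports of the Dense and LIF children have three name components."""
    return port.count(".") == 2


def resolve(port):
    """Follow parent-level ports until reaching leaf-level destination ports."""
    targets = []
    for nxt in connections.get(port, []):
        if is_leaf_port(nxt):
            targets.append(nxt)
        else:
            targets.extend(resolve(nxt))
    return targets


print(resolve("layer0.lif.s_out"))    # ['layer1.dense.s_in']
print(resolve("layer1.dense.a_out"))  # ['layer1.lif.a_in']
```

In this picture, the DenseLayer ports only relay connections, while the data itself flows between the leaf Dense and LIF Processes.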

The alias() method exposes the variables of the LIF and Dense child Processes to the DenseLayer parent Process. The variables of the DenseLayer parent Process are accessed using proc.vars, while the variables of a child Process like LIF are accessed using self.lif.vars. Unlike a LeafProcessModel, a SubProcessModel does not require variables to be initialized with a specified data type or precision. This is because the data types and precisions of all DenseLayer Process variables (proc.vars) are determined by the particular ProcessModels chosen by the Run Configuration to implement the LIF and Dense child Processes. This allows the same SubProcessModel to be used flexibly across multiple languages and compute resources when the child Processes have multiple ProcessModel implementations. SubProcessModels thus enable the composition of complex applications agnostic of platform-specific implementations. In this example, the LIF and Dense child Processes are implemented by Python PyLoihiProcessModels (for LIF, the PyLifModel defined earlier), so the DenseLayer variables aliased from LIF and Dense implicitly have type LavaPyType and the precisions specified in those ProcessModels.
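A toy model of what aliasing means for reads and writes: once the parent variable is aliased, it holds no state of its own and forwards get() and set() to the child variable. ToyVar is a hypothetical class for illustration, not Lava's Var implementation:

```python
class ToyVar:
    """Hypothetical stand-in for a Process variable (not Lava's Var class)."""

    def __init__(self, value=None):
        self._value = value
        self._alias_of = None

    def alias(self, other):
        # After aliasing, this variable forwards all reads and writes to `other`.
        self._alias_of = other

    def get(self):
        if self._alias_of is not None:
            return self._alias_of.get()
        return self._value

    def set(self, value):
        if self._alias_of is not None:
            self._alias_of.set(value)
        else:
            self._value = value


lif_vth = ToyVar(10.0)    # variable owned by the LIF child Process
layer_vth = ToyVar()      # DenseLayer's own variable, before aliasing
layer_vth.alias(lif_vth)
layer_vth.set(12.0)       # writing through the parent variable...
print(lif_vth.get())      # ...updates the child variable: 12.0
```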


import numpy as np

# Note: this import replaces the Dense Process defined earlier in this tutorial
# with the Dense Process from Lava's process library, whose shape is taken
# from the weight matrix.
from lava.proc.dense.process import Dense
from lava.magma.core.model.sub.model import AbstractSubProcessModel
from lava.magma.core.sync.protocols.loihi_protocol import LoihiProtocol
from lava.magma.core.decorator import implements


@implements(proc=DenseLayer, protocol=LoihiProtocol)
class SubDenseLayerModel(AbstractSubProcessModel):
    def __init__(self, proc):
        """Builds sub Process structure of the Process."""
        # Instantiate child processes.
        # The shape is a 2-tuple (the shape of the weight matrix).
        # Defaults match those of the DenseLayer Process.
        shape = proc.proc_params.get("shape", (1, 1))
        weights = proc.proc_params.get("weights", np.zeros(shape))
        bias_mant = proc.proc_params.get("bias_mant", 0)
        vth = proc.proc_params.get("vth", 10)
        du = proc.proc_params.get("du", 0)
        dv = proc.proc_params.get("dv", 0)

        self.dense = Dense(weights=weights)
        self.lif = LIF(shape=(shape[0],), du=du, dv=dv,
                       bias_mant=bias_mant, vth=vth)

        # Connect the parent InPort to the InPort of the Dense child-Process.
        proc.in_ports.s_in.connect(self.dense.in_ports.s_in)

        # Connect the OutPort of the Dense child-Process to the InPort of the
        # LIF child-Process.
        self.dense.out_ports.a_out.connect(self.lif.in_ports.a_in)

        # Connect the OutPort of the LIF child-Process to the OutPort of the
        # parent Process.
        self.lif.out_ports.s_out.connect(proc.out_ports.s_out)

        # Expose the child-Process variables as variables of the parent Process.
        proc.vars.u.alias(self.lif.vars.u)
        proc.vars.v.alias(self.lif.vars.v)
        proc.vars.bias_mant.alias(self.lif.vars.bias_mant)
        proc.vars.du.alias(self.lif.vars.du)
        proc.vars.dv.alias(self.lif.vars.dv)
        proc.vars.vth.alias(self.lif.vars.vth)
        proc.vars.weights.alias(self.dense.vars.weights)

Run the DenseLayer Process

Run Connected DenseLayer Processes


import numpy as np

from lava.magma.core.run_configs import Loihi1SimCfg
from lava.magma.core.run_conditions import RunSteps

dim = (3, 3)

# Create the weight matrix.
weights0 = np.zeros(shape=dim)
weights0[1, 1] = 1
weights1 = weights0

# Instantiate two DenseLayers.
layer0 = DenseLayer(shape=dim, weights=weights0, bias_mant=4, vth=10)
layer1 = DenseLayer(shape=dim, weights=weights1, bias_mant=4, vth=10)

# Connect the first DenseLayer to the second DenseLayer.
layer0.s_out.connect(layer1.s_in)

print('Layer 1 weights: \n', layer1.weights.get(), '\n')
print('\n ----- \n')

# Select floating-point ProcessModels and prefer SubProcessModels
# for Hierarchical Processes.
rcfg = Loihi1SimCfg(select_tag='floating_pt', select_sub_proc_model=True)

for t in range(9):
    # Run the entire network of Processes for one time step.
    layer1.run(condition=RunSteps(num_steps=1), run_cfg=rcfg)
    print('t: ', t)
    print('Layer 0 v: ', layer0.v.get())
    print('Layer 1 u: ', layer1.u.get())
    print('Layer 1 v: ', layer1.v.get())
    print('\n ----- \n')

layer1.stop()

Layer 1 weights:
[[0. 0. 0.]
 [0. 1. 0.]
 [0. 0. 0.]]

-----

t: 0
Layer 0 v: [4. 4. 4.]
Layer 1 u: [0. 0. 0.]
Layer 1 v: [4. 4. 4.]

-----

t: 1
Layer 0 v: [8. 8. 8.]
Layer 1 u: [0. 0. 0.]
Layer 1 v: [8. 8. 8.]

-----

t: 2
Layer 0 v: [0. 0. 0.]
Layer 1 u: [0. 0. 0.]
Layer 1 v: [0. 0. 0.]

-----

t: 3
Layer 0 v: [4. 4. 4.]
Layer 1 u: [0. 1. 0.]
Layer 1 v: [4. 5. 4.]

-----

t: 4
Layer 0 v: [8. 8. 8.]
Layer 1 u: [0. 1. 0.]
Layer 1 v: [8. 0. 8.]

-----

t: 5
Layer 0 v: [0. 0. 0.]
Layer 1 u: [0. 1. 0.]
Layer 1 v: [0. 5. 0.]

-----

t: 6
Layer 0 v: [4. 4. 4.]
Layer 1 u: [0. 2. 0.]
Layer 1 v: [4. 0. 4.]

-----

t: 7
Layer 0 v: [8. 8. 8.]
Layer 1 u: [0. 2. 0.]
Layer 1 v: [8. 6. 8.]

-----

t: 8
Layer 0 v: [0. 0. 0.]
Layer 1 u: [0. 2. 0.]
Layer 1 v: [0. 0. 0.]

-----
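The printed trace can be reproduced outside Lava with a short NumPy sketch. It assumes that layer0's unconnected s_in delivers no spikes, and that each Dense connection delivers its output one time step after receiving the spikes (a one-step synaptic delay). The trace above is consistent with that assumption: layer 0 spikes and resets at t = 2, and layer 1's u first changes at t = 3. The names lif_step and simulate_two_layers are hypothetical.

```python
import numpy as np


def lif_step(u, v, a_in, bias_mant, vth, du=0.0, dv=0.0):
    """One LIF update in place, following PyLifModel.run_spk; returns the spikes."""
    u[:] = u * (1 - du) + a_in
    v[:] = v * (1 - dv) + u + bias_mant
    s_out = v >= vth
    v[s_out] = 0
    return s_out


def simulate_two_layers(weights0, weights1, num_steps, bias_mant=4.0, vth=10.0):
    """Hypothetical NumPy emulation of two connected DenseLayers."""
    n0, n1 = weights0.shape[0], weights1.shape[0]
    u0, v0 = np.zeros(n0), np.zeros(n0)
    u1, v1 = np.zeros(n1), np.zeros(n1)
    s_in0 = np.zeros(weights0.shape[1], dtype=bool)  # assumption: no input spikes
    a_buf0, a_buf1 = np.zeros(n0), np.zeros(n1)      # assumption: one-step Dense delay
    trace = []
    for _ in range(num_steps):
        # Each Dense sends last step's accumulation, then accumulates this step's spikes.
        a_in0, a_buf0 = a_buf0, weights0[:, s_in0].sum(axis=1)
        s_out0 = lif_step(u0, v0, a_in0, bias_mant, vth)
        a_in1, a_buf1 = a_buf1, weights1[:, s_out0].sum(axis=1)
        lif_step(u1, v1, a_in1, bias_mant, vth)
        trace.append((v0.copy(), u1.copy(), v1.copy()))
    return trace


weights = np.zeros((3, 3))
weights[1, 1] = 1
for t, (v0, u1, v1) in enumerate(simulate_two_layers(weights, weights, 9)):
    print('t:', t, '| Layer 0 v:', v0, '| Layer 1 u:', u1, '| Layer 1 v:', v1)
```

Without the assumed delay, layer 1's u would already change at t = 2, which does not match the trace printed above.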

How to learn more?

Learn how to access memory from other Processes in the Remote Memory Access Tutorial.

If you want to find out more about SubProcessModels, have a look at the Lava documentation or dive into the source code.

To receive regular updates on the latest developments and releases of the Lava Software Framework please subscribe to our newsletter.